What is the Google “Top Stories” carousel?

Appearing in Google's Top Stories carousel is a great way to get exposure for news content and boost organic web traffic. However, Google provides very little data (clicks, impressions, etc.) about the traffic you receive from this carousel.

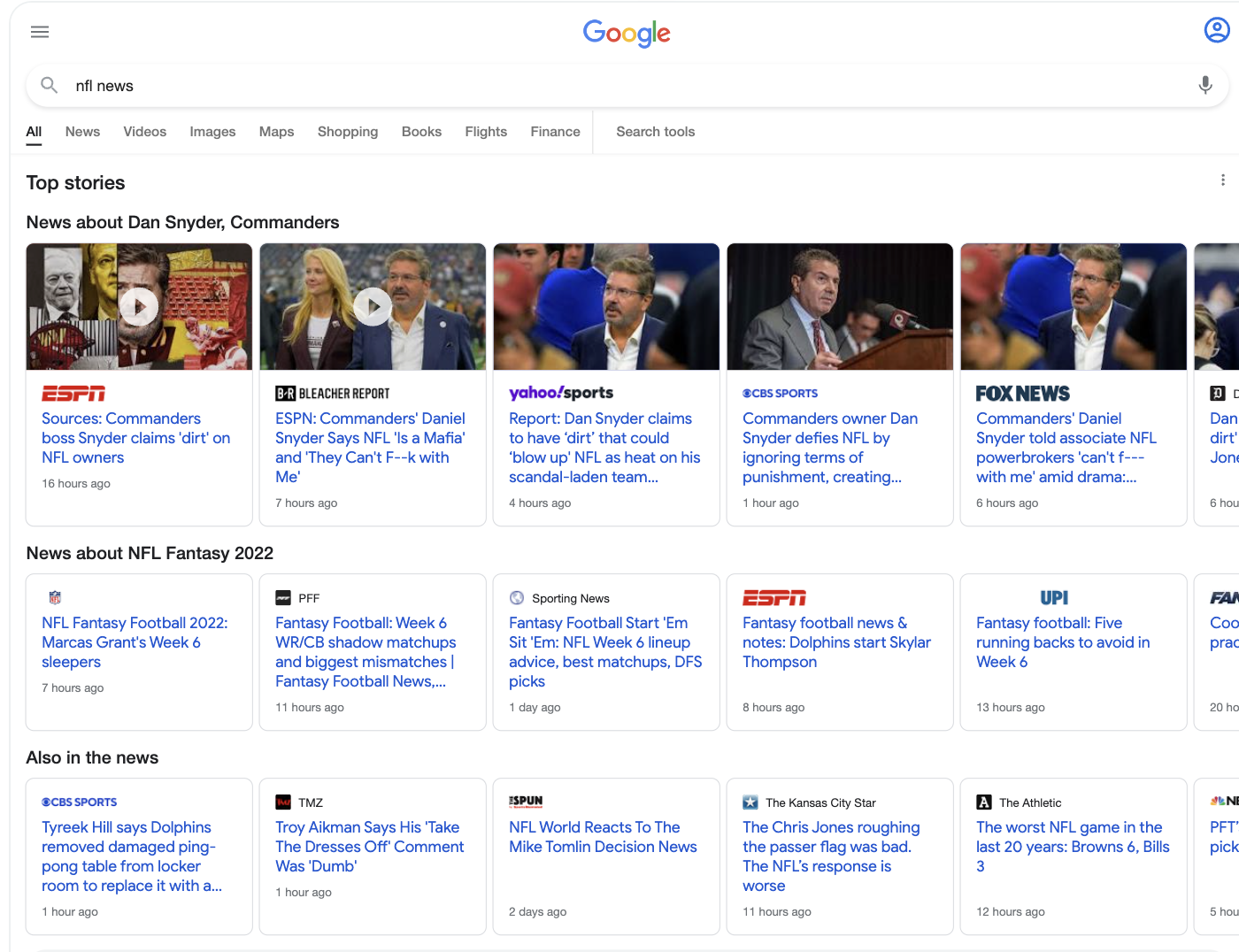

The Top Stories carousel is an AI-powered search engine results page (SERP) feature that displays useful and timely articles from a broad range of high-quality and trustworthy news providers.

What Python libraries do you need?

    !pip install requests

    import requests
    import json
    import pandas as pd

How to set up the parameters for the request?

To scrape the “Top Stories” carousel, you need to register for a free SerpApi account, which provides 100 free requests per month.

Once you have registered, copy your API key and paste it into the script.

SerpApi also offers a really nice feature, the SerpApi Playground, where you can adjust the parameters to your needs.

The current example uses the following parameters:

- Search engine – Google

- Query – “nfl predictions”

- Location – New York

- Domain – Google.com

- Language – English

- Region – US

- Device – Mobile

    params = {
        "engine": "google",
        "q": "nfl predictions",
        # "location" is the request parameter; "location_requested" and
        # "location_used" only appear in the API response
        "location": "New York, NY, United States",
        "google_domain": "google.com",
        "hl": "en",
        "gl": "us",
        "device": "mobile",
        "api_key": "API KEY"
    }
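
Instead of pasting the key directly into the script, you may prefer to read it from an environment variable. The following is only a minimal sketch: the helper `build_params` and the variable name `SERPAPI_API_KEY` are hypothetical names chosen for this example, and the parameter values simply mirror the ones listed above.

```python
import os


def build_params(query, api_key=None):
    """Return request parameters for a mobile Google search via SerpApi."""
    # SERPAPI_API_KEY is a hypothetical variable name chosen for this sketch.
    key = api_key or os.environ.get("SERPAPI_API_KEY")
    if not key:
        raise ValueError("No API key: pass api_key or set SERPAPI_API_KEY")
    return {
        "engine": "google",
        "q": query,
        "location": "New York, NY, United States",
        "google_domain": "google.com",
        "hl": "en",
        "gl": "us",
        "device": "mobile",
        "api_key": key,
    }


# "API KEY" is a placeholder, as in the rest of the article.
params = build_params("nfl predictions", api_key="API KEY")
```

Keeping the key out of the source makes it safer to share the notebook or push the script to a public repository.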

Sending the request to SerpApi

Store the API response in a variable named “response”. The get function from the requests library sends the request, with the parameters appended to the URL as a query string.

    response = requests.get("https://serpapi.com/search.json", params=params).json()

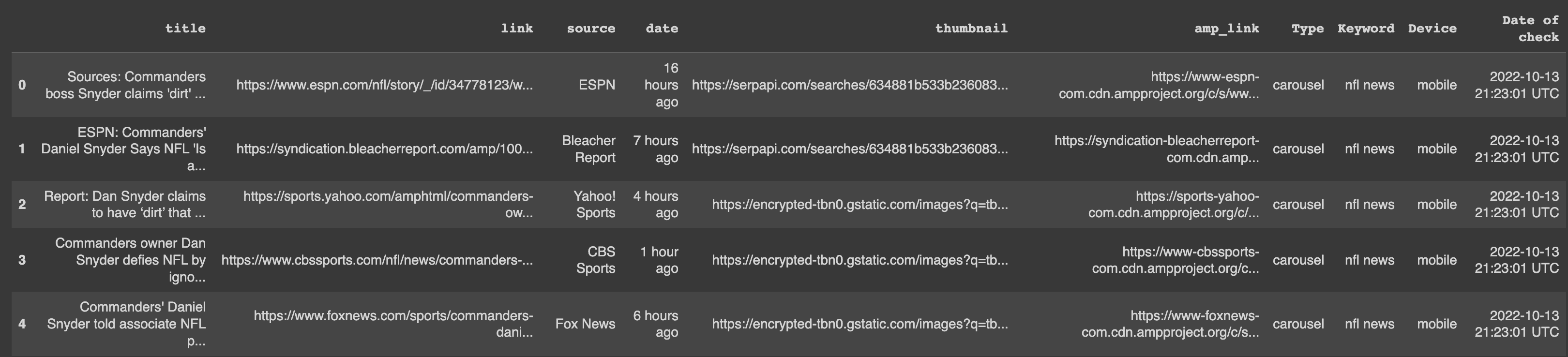
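
The one-line request above assumes that everything goes well. A slightly more defensive version might look like the sketch below. The function names `fetch_serp` and `check_response` and the 30-second timeout are choices made for this example, and the check for a top-level "error" field is an assumption about how the API reports failures, so verify it against a real failed response.

```python
import requests


def check_response(data):
    """Raise if the parsed JSON carries an "error" field, else return it."""
    # Assumption: failures are reported as a top-level "error" string.
    if "error" in data:
        raise RuntimeError(f"SerpApi error: {data['error']}")
    return data


def fetch_serp(params, timeout=30):
    """Send the search request and return the parsed, checked JSON."""
    resp = requests.get(
        "https://serpapi.com/search.json", params=params, timeout=timeout
    )
    resp.raise_for_status()  # surface HTTP-level failures (4xx/5xx)
    return check_response(resp.json())
```

With this in place, `response = fetch_serp(params)` can replace the one-liner. A failed call then stops the script right away instead of causing a confusing KeyError later on.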

Storing the API response in a Pandas DataFrame

The first step is to filter the full API response, which contains information from the entire SERP (search engine results page), and store only the “Top Stories” data in a variable.

As you may know, a mobile results page can contain more than one news carousel. That is why a second variable loads only the “Top Stories” carousel into a Pandas DataFrame.

    top_t = response['top_stories']
    carousel = pd.DataFrame(top_t['carousel'])
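
Not every query triggers a Top Stories block, in which case the lookup above raises a KeyError. Here is a hedged sketch of a more forgiving extraction. The helper name `extract_top_stories` is hypothetical, the list-shaped branch is an assumption about responses that differ from this mobile example, and the sample dictionary is made up purely to exercise the function.

```python
def extract_top_stories(response):
    """Return the Top Stories items as a list of dicts (empty if absent)."""
    top = response.get("top_stories")
    if top is None:
        return []  # the query did not trigger a Top Stories block
    if isinstance(top, dict):
        # Shape used in this article's mobile example: items under "carousel".
        return top.get("carousel", [])
    # Assumption: some responses return the items directly as a list.
    return list(top)


# Hypothetical, trimmed-down response used only to exercise the helper.
sample = {
    "top_stories": {
        "carousel": [{"title": "Example story", "source": "Example News"}]
    }
}
stories = extract_top_stories(sample)
```

The resulting list can be passed straight to `pd.DataFrame(...)`. An empty list simply produces an empty DataFrame.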

Adding more data into the data frame from the API

- The first variable holds the query from the API response, which goes into the “Keyword” column

- The second variable holds the device used for the search, which goes into the “Device” column

- The third variable holds the date and time of the check, which goes into the “Date of check” column

    kwrd = response['search_parameters']['q']
    device = response['search_parameters']['device']
    date = response["search_metadata"]['processed_at']

    carousel['Keyword'] = kwrd
    carousel['Device'] = device
    carousel['Date of check'] = date

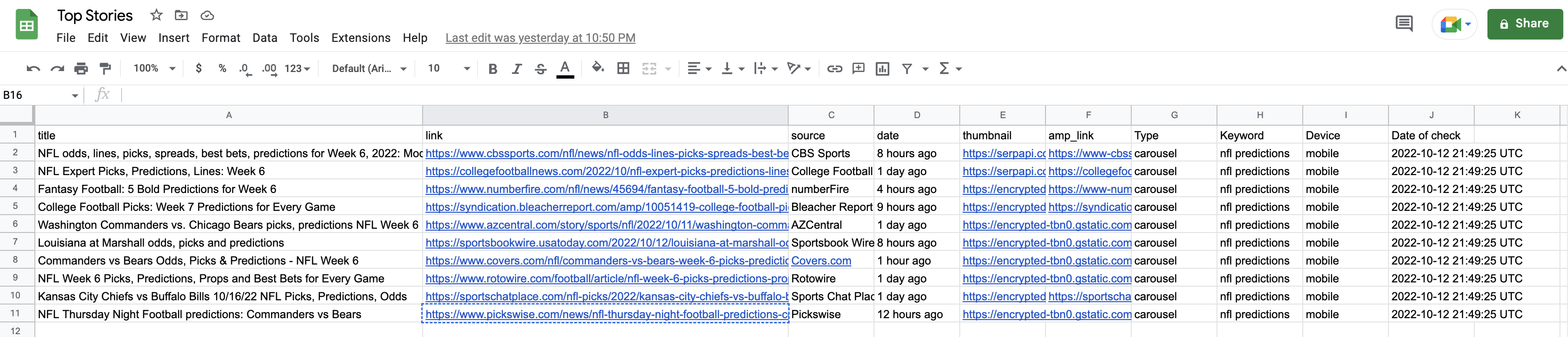
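
If you plan to run the check for several keywords, the same three columns can be added by a small reusable function. This is only a sketch: `add_check_metadata` is a hypothetical helper name, and the sample response and carousel below contain made-up values for illustration only.

```python
import pandas as pd


def add_check_metadata(carousel, response):
    """Return a copy of `carousel` with keyword, device and check-time columns."""
    search = response["search_parameters"]
    return carousel.assign(**{
        "Keyword": search["q"],
        "Device": search["device"],
        "Date of check": response["search_metadata"]["processed_at"],
    })


# Hypothetical response fragment with made-up values, for illustration only.
sample_response = {
    "search_parameters": {"q": "nfl predictions", "device": "mobile"},
    "search_metadata": {"processed_at": "2023-01-01 00:00:00 UTC"},
}
sample_carousel = pd.DataFrame([{"title": "Story A"}, {"title": "Story B"}])
enriched = add_check_metadata(sample_carousel, sample_response)
```

Because `assign` returns a new DataFrame, the original carousel is left untouched, which makes it easier to combine the results of several checks afterwards.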

Exporting the data into a Google Sheets document.

This is a standard piece of code that you can use to export a DataFrame to a Google Sheets file. It relies on the google.colab module, so it is meant to run in a Google Colab notebook.

    # Authenticate the Colab session with your Google account
    from google.colab import auth
    auth.authenticate_user()

    import gspread
    from google.auth import default
    from gspread_dataframe import set_with_dataframe

    creds, _ = default()
    gc = gspread.authorize(creds)

    # Create the spreadsheet and write the DataFrame to its first worksheet
    doc_name = 'Top Stories'
    sh = gc.create(doc_name)
    worksheet = sh.sheet1
    set_with_dataframe(worksheet, carousel)

Exported data from the “Top Stories” carousel to Google Sheets

That is how your exported DataFrame should look, with all the needed values from the API.

Additionally, you can find this simple script on GitHub and in Google Colab.
